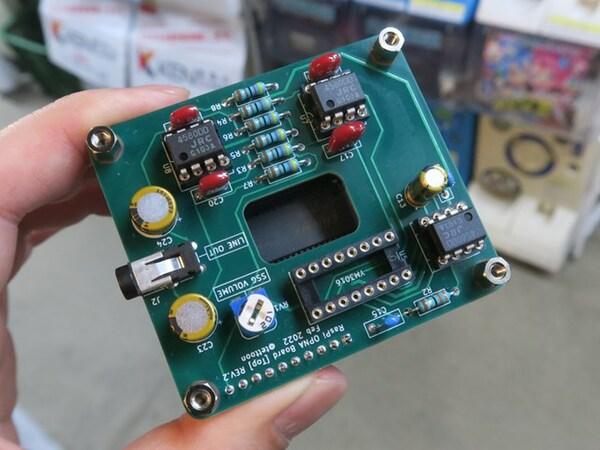

People, bicycles, other vehicles (with cars and trucks distinguished), traffic lights, medians (displayed as traffic cones), and more are shown in real time (photo taken by the author)

[Series] If there is AI

● No meters, no buttons!

Tesla, the major US electric vehicle (EV) maker, has been in the news lately because its market capitalization exceeded $1 trillion, and I have recently been driving its Model 3. Tesla is a leader in autonomous driving, and its cars make full use of cutting-edge AI technology, so I think it is a perfect subject for this series. Here are my impressions.

The first thing that surprised me when I opened the door was the clean cockpit, unlike anything in an ordinary car. There is no speedometer in front of you, and there is no ignition button of the kind every ordinary car has. Locking the doors and starting the system are done only with a card, and there are almost no levers or buttons for the usual car functions. Everything except the steering wheel, accelerator, and brake is operated from the large touchscreen mounted rather bluntly on the center console.

That is fine for people who are comfortable operating a smartphone, but at this stage I expect opinions to be split on whether one would want to drive it. Software updates also arrive from time to time, so it feels more like a moving computer than a car.

Even detailed settings such as the mirror angle are on the touchscreen. That is fine if you turn on Autopilot and leave things to the car, but it is inconvenient when, for example, you want to operate the wipers manually. Because you have to shift your eyes to the screen to operate it, having every control there may feel worrying, as if you were operating a smartphone at the wheel.

Through my work I have occasionally seen self-driving cars under development, but I was impressed to see, in the real-time images on the screen of a car I was actually riding in, how far AI has been put to practical use.

What Tesla calls "Tesla Vision built on a deep neural network" combines information from eight surround cameras, twelve ultrasonic sensors, and a forward-facing radar to monitor all directions at once. In other words, the car can see more than a human driver can. This is how people, bicycles, other vehicles (with cars and trucks distinguished), traffic lights, medians (displayed as traffic cones), and so on are shown in real time (photo taken by the author). In actual driving, it seems that a shadow with sharp contrast on the road surface is sometimes recognized as an obstacle, and the car may slow down.

Incidentally, Tesla's cameras keep monitoring the surroundings even while the car is parked, so when you get back in, anything that happened while you were away is reported as an event. Be warned: if you stare at a parked Tesla, the returning driver will see you (laughs).

● It is "Space Mountain" without a doorknob, but ...

Beyond the AI recognition technology, what surprised me even more was the acceleration from a standstill to 100 km/h in just 3.3 seconds: Tesla's "Shin!" feeling of acceleration. I also drive a turbocharged car that reaches 100 km/h in about 5 seconds, but the Tesla is completely different from the "bouin!" acceleration of an engine car. Even when I stay within the speed limit, accelerating this quickly makes me uneasy. It may feel like riding Space Mountain.

The ride feels like a vehicle of the future. On the outside, the lack of doorknobs and the glass roof running from front to back are novel, but otherwise the exterior is relatively ordinary, and I would like a more futuristic look to match the technology inside. I have not driven long distances yet, so I would like to report again if I get the chance.

Maki Sakamoto / Vice President of The University of Electro-Communications; Professor, Graduate School of Information Science and Engineering / Artificial Intelligence Advanced Research Center. Former director of the Japanese Society for Artificial Intelligence. COO of Sensitive AI Co., Ltd. She answers questions on AI and robots for NHK Radio 1's "Children's Science Telephone Consultation" and explains the latest research and business trends in artificial intelligence. Her specialty is analyzing humanities-type phenomena related to human language and psychology, such as onomatopoeia, the five senses, sensibility, and emotion, from a science and engineering perspective and incorporating them into artificial intelligence. Her books include "A book that almost understands artificial intelligence taught by Maki Sakamoto" (Ohmsha).

@DIME editorial department
